A practice-based understanding of artificial intelligence as a conventional tool with unconventional costs

When Navigating.art began adding AI features to its software, the project didn't go as planned. But as the nonprofit worked through its missteps, it discovered something useful: AI tools operate under the same constraints and offer the same potential as conventional software, with one significant amplification. The gap between how AI products are marketed and how they function affects everyone who uses them. Understanding that gap, and recognizing that AI features face the same fundamental trade-offs as any other software, helps explain why some tools work well while others disappoint, however sophisticated the technology is claimed to be. The amplification is cost: the full investment required to make an AI feature actually work for real users is higher at every step of development and deployment. The lessons from Navigating.art apply beyond AI, showing why digital tools of all kinds succeed or fail and what drives decisions about which features get developed and which get abandoned.

The conventional side of AI products

Normal development practices apply

At Navigating.art, we learned this lesson firsthand. When our team introduced an AI-based chatbot to help researchers query digitized publications, we initially saw it as innovative technology requiring special treatment. The chatbot was technically elegant. It converted text into vector embeddings, performed semantic search, and returned answers with page citations. However, it failed exactly where conventional software development wisdom would have predicted: we hadn't adequately researched user needs before building it. Art historians who uploaded publications to the platform had little reason to query them conversationally; they had already read and analyzed these texts extensively during their research.
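The retrieval pattern described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Navigating.art's implementation: a simple word-count vector stands in for a learned embedding model, and the function names (`embed`, `cosine`, `search`) and the sample pages are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for a learned embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(query: str, pages: dict[int, str], k: int = 2) -> list[tuple[int, float]]:
    """Return the k pages most similar to the query as (page_number, score) pairs."""
    q = embed(query)
    scored = [(page, cosine(q, embed(text))) for page, text in pages.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Hypothetical pages from a digitized publication, keyed by page number.
pages = {
    12: "The altarpiece was commissioned for the chapel in 1432.",
    47: "Pigment analysis suggests the panel was repainted later.",
    88: "The chapel inventory lists the altarpiece among its treasures.",
}

for page, score in search("Who commissioned the altarpiece for the chapel?", pages):
    print(f"p. {page}: {score:.2f}")
```

In a production system, `embed` would call a trained embedding model and pages would usually be split into smaller passages; keeping the page number attached to each passage is what makes a citation possible at all. Retrieval alone, however, does not guarantee that a generated answer stays faithful to the retrieved pages.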

Moreover, the chatbot's citations were often incorrect. Although it was prompted to cite specific pages, the system sometimes synthesized information from multiple locations or drew on its training data rather than on the source document alone. For scholars whose work depends on verifiable evidence, this wasn't just inconvenient; it violated fundamental disciplinary standards.
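One conventional safeguard is to check each generated citation against the cited page before showing it to a user. The sketch below is an assumption about how such a check could work, not a description of the project's system: it flags a citation when too few of the claim's content words appear on the cited page. The `verify_citation` function, the four-letter word filter, the 0.6 threshold, and the sample text are all hypothetical.

```python
import re


def content_words(text: str) -> set[str]:
    """Lowercase words of four or more letters, a crude filter for content words."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if len(w) >= 4}


def verify_citation(claim: str, pages: dict[int, str], cited_page: int, threshold: float = 0.6) -> bool:
    """Return True if enough of the claim's content words appear on the cited page."""
    words = content_words(claim)
    if not words or cited_page not in pages:
        return False  # nothing to check, or the cited page doesn't exist
    on_page = content_words(pages[cited_page])
    overlap = len(words & on_page) / len(words)
    return overlap >= threshold


# Hypothetical source pages and a generated claim.
pages = {
    12: "The altarpiece was commissioned for the chapel in 1432.",
    47: "Pigment analysis suggests the panel was repainted later.",
}
claim = "The altarpiece was commissioned for the chapel."

print(verify_citation(claim, pages, 12))  # prints True
print(verify_citation(claim, pages, 47))  # prints False
```

A lexical check like this catches only some failures. A claim synthesized from several pages, or drawn from training data, can still share vocabulary with the cited page, so human review remains necessary.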

What we learned is that AI features require the same rigorous development practices as any other software: clear problem definition, user research, iterative testing, and transparent communication. The AI component didn't exempt us from these fundamentals; if anything, it made them more critical. When we later began developing an image recognition feature, we approached it deliberately, with extensive user consultation and cross-disciplinary collaboration from the start.

AI isn’t a monolith: Understanding real and nuanced technological capabilities

The success or failure of AI-based products reflects their design and implementation, not an inherent quality of AI itself. A well-designed AI tool can effectively support specific tasks, while a poorly designed one can mislead users or waste resources. This is true of all software.

In art historical research, AI tools work best when they enhance rather than replace human expertise. They can accelerate metadata tagging, surface patterns across large image collections, or flag potential duplicates in archives. But, like all digital tools, they struggle with interpretation, attribution based on context rather than visual similarity, and understanding the cultural or historical significance of artworks.

The term AI generally refers to computational techniques that find patterns in data and make predictions or suggestions based on those patterns. Most modern AI learns from large datasets rather than following fixed rules, which is why it can seem conversational or creative. But it doesn't actually understand or think; it processes patterns through mathematics and statistics. Recognizing AI as a sophisticated pattern-matching tool, rather than something magical or intelligent, makes its limitations and costs easier to understand.

The philosopher John Searle's famous Chinese Room argument illustrates this distinction: manipulating symbols according to formal rules is fundamentally different from understanding what they mean. A person in a room following rules to manipulate Chinese characters might produce coherent responses without understanding Chinese at all. Similarly, AI systems process patterns without understanding them. Searle's argument is a reminder that computation, however fluent its output, does not amount to understanding.

What we often call AI “hallucinations” are more accurately described as misalignments or errors in pattern prediction. A comprehensive survey on hallucination in large language models by Huang et al. explains that these outputs result from statistical processes, not consciousness. When an AI system produces incorrect information, it's not inventing fantasies the way a conscious being might; it's following probabilistic patterns in its training data that happen to lead to incorrect outputs. These limitations aren't temporary bugs to be fixed. Instead, they reflect the fundamental difference between pattern recognition and understanding.
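To make the point concrete, here is a deliberately tiny pattern model. It is far simpler than a large language model and is only an illustrative assumption, but it rests on the same statistical idea of predicting the next word from observed sequences. Trained on two true sentences, this bigram model assigns a false recombination exactly the same probability as a true statement, because it tracks which words follow which, not which facts hold. The corpus and the `probability` function are hypothetical.

```python
from collections import Counter, defaultdict

# Two true statements serve as the entire "training data".
corpus = [
    "rembrandt painted the night watch",
    "vermeer painted the milkmaid",
]

# Count which word follows which (a bigram model).
follows: dict[str, Counter] = defaultdict(Counter)
for sentence in corpus:
    words = sentence.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1


def probability(sentence: str) -> float:
    """Probability of a word sequence, given its first word, under the bigram counts."""
    words = sentence.split()
    p = 1.0
    for current, nxt in zip(words, words[1:]):
        counts = follows.get(current)
        if not counts:
            return 0.0  # the model has never seen anything follow this word
        p *= counts[nxt] / sum(counts.values())
    return p


print(probability("vermeer painted the milkmaid"))     # prints 0.5 (true)
print(probability("vermeer painted the night watch"))  # prints 0.5 (false)
```

Nothing in the model is broken: the false sentence is a valid path through the patterns it learned. Larger models are vastly more capable, but their errors arise from the same kind of statistical prediction rather than from invention.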

AI can help: Automation and new opportunities for engagement

When well-designed and appropriately deployed, AI tools can meaningfully reduce workload. A 2024 meta-analysis by Chen et al. examining human-AI collaboration in image interpretation found that these partnerships can reduce the time required while maintaining or improving accuracy, but only when systems are carefully designed with clear interfaces, appropriate training, and a realistic scope.

For under-resourced cultural heritage institutions, this potential matters. A small museum with limited staff might use AI tools to generate preliminary catalogue descriptions that humans then refine, or to organize large digital collections into initial groupings that curators review and correct. These applications don't replace expertise; they create space for expertise to be applied more strategically.

Beyond efficiency gains, AI can enable new forms of engagement with cultural heritage. Interactive systems might allow museum visitors to ask questions about artworks in their own language and receive contextualized responses. Researchers might explore visual connections across collections worldwide. Educators might generate customized learning materials adapted to different knowledge levels. These possibilities are genuine. But they require careful implementation that respects the complexity of art historical knowledge, acknowledges the interpretive nature of the discipline, and maintains transparency about what AI systems can and cannot do.

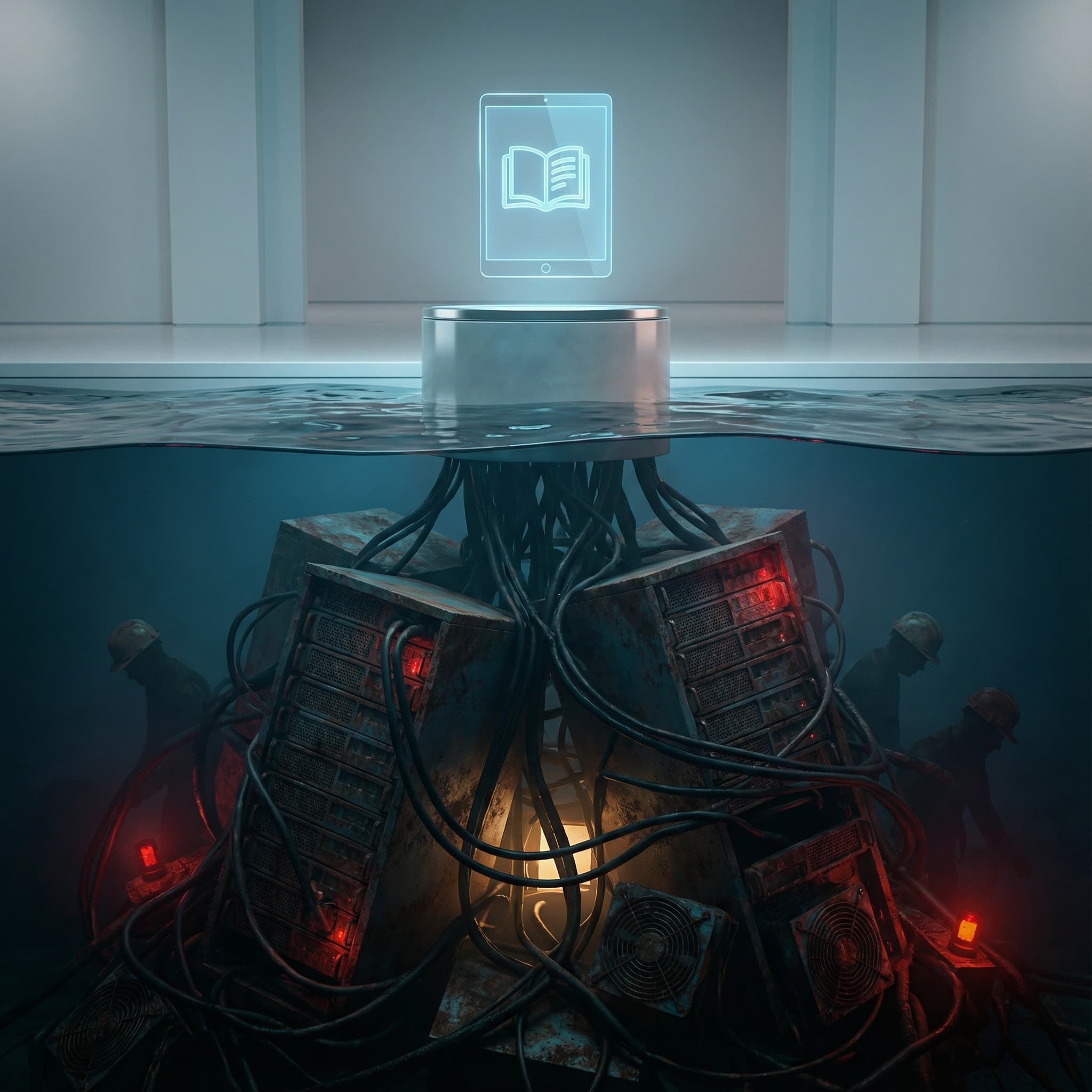

The unconventional costs

While AI features follow conventional development practices, they carry distinctive costs. AI systems depend on invisible labor, including data labelers, content moderators, and quality evaluators who often work under precarious conditions. Environmental costs are also substantial. Strubell, Ganesh, and McCallum estimated that training a single large natural language processing model, including an extensive neural architecture search, could emit nearly five times the lifetime carbon emissions of an average car. The OECD's 2022 assessment shows that hardware production, operational energy, and electronic waste compound these impacts. Our chatbot's queries, though individually small, added up to significant resource use.

The training data that feeds most AI products also raises privacy and copyright concerns. Gebru et al.'s “Datasheets for Datasets” calls for transparent documentation of data provenance and consent, but recent legal scholarship reveals complex tensions among text and data mining practices, fair use doctrines, and creator rights. Mary Gray and Siddharth Suri's Ghost Work, Kate Crawford’s Atlas of AI, and Karen Hao’s Empire of AI together document environmental damage, privacy issues, and the psychological stress and income insecurity faced by the global workforce that powers AI systems. The benefits of well-designed tools that use AI techniques can be substantial, as those of earlier digital technologies have been, but their distinctive impact on people and places cannot be overlooked.

Balancing impact and cost through technological development

AI technologies aren’t going anywhere, and national and international regulations and guidance are unlikely to curb the costs of their development and deployment anytime soon. The question for now is how to engage with them thoughtfully. Understanding AI as a conventional tool with unconventional costs means applying established software development practices while confronting labor exploitation, environmental impact, privacy implications, and copyright questions. For cultural heritage professionals, this means building AI tools that respect disciplinary standards and ethical practices and that deliver enough positive impact to offset their costs. It means using AI to augment human expertise rather than replace it, preserving space for interpretation and deeper engagement. These standards aren’t impossible to achieve, but for Navigating.art, meeting them required missteps, humility, and a dedication to growth.
